9. Practicalities#

In the past weeks of the course you have had the opportunity to familiarize yourself with the nuts and bolts of Monte Carlo simulations and have tested your skills with the computer assignments in the Jupyter notebooks. In doing so, especially for the computation intensive models of the last few weeks, you probably have contemplated whether the process could not be organized more efficiently: perhaps you were running the simulation in the notebook and had to wait for it to finish before continuing with the analysis; or you hit the time limit on the JupyterHub session; or the different pieces of code you have written (and rewritten, adapted, patched,…) are scattered in a non-linear fashion in the notebook cells; or…. If you have not encountered these limitations yet, you will certainly face them in the project you are about to commence. It is therefore a good idea to discuss some organizational aspects of running a medium to large scale simulation project.

9.1. Coding#

9.1.1. An example: the 2D Ising model#

Let us go through an explicit example: simulating the 2D Ising model with the Wolff algorithm as we did in week 6. The first thing we should do is make a stand-alone program that can perform the required simulation. For this we can use the Python script ising.py available in the folder ising_example.

import numpy as np

from collections import deque

import argparse

import time

import h5py

rng = np.random.default_rng()

# Ising model code from week 6

def aligned_init_config(width):

'''Produce an all +1 configuration.'''

return np.ones((width,width),dtype=int)

def neighboring_sites(s,w):

'''Return the coordinates of the 4 sites adjacent to s on an w*w lattice.'''

return [((s[0]+1)%w,s[1]),((s[0]-1)%w,s[1]),(s[0],(s[1]+1)%w),(s[0],(s[1]-1)%w)]

def cluster_flip(state,seed,p_add):

'''Perform a single Wolff cluster move with specified seed on the state with parameter p_add.'''

w = len(state)

spin = state[seed]

state[seed] = -spin

cluster_size = 1

unvisited = deque([seed]) # use a deque to efficiently track the unvisited cluster sites

while unvisited: # while unvisited sites remain

site = unvisited.pop() # take one and remove from the unvisited list

for nbr in neighboring_sites(site,w):

if state[nbr] == spin and rng.uniform() < p_add:

state[nbr] = -spin

unvisited.appendleft(nbr)

cluster_size += 1

return cluster_size

def wolff_cluster_move(state,p_add):

'''Perform a single Wolff cluster move on the state with addition probability p_add.'''

seed = tuple(rng.integers(0,len(state),2))

return cluster_flip(state,seed,p_add)

def run_ising_wolff_mcmc(state,p_add,n):

'''Run n Wolff moves on state and return total number of spins flipped.'''

total = 0

for _ in range(n):

total += wolff_cluster_move(state,p_add)

return total

def compute_magnetization(config):

'''Compute the magnetization M(s) of the state config.'''

return np.sum(config)

# use the argparse package to parse command line arguments

parser = argparse.ArgumentParser(description='Measures the magnetization in the 2D Ising model')

parser.add_argument('-w', type=int, help='Lattice size W')

parser.add_argument('-t', type=float, help='Temperature T (in units where J=kB=1)')

parser.add_argument('-n', type=int, help='Number N of measurements (indefinite by default)')

parser.add_argument('-e', type=int, default=100, help='Number E of equilibration sweeps')

parser.add_argument('-m', type=int, default=10, help='Number M of sweeps per measurement')

parser.add_argument('-o', type=int, default=30, help='Time in seconds between file outputs')

parser.add_argument('-f', help='Output filename')

args = parser.parse_args()

# perform sanity checks on the arguments

if args.w is None or args.w < 1:

parser.error("Please specify a positive lattice size!")

if args.t is None or args.t <= 0.0:

parser.error("Please specify a positive temperature!")

if args.e < 10:

parser.error("Need at least 10 equilibration sweeps to determine the average cluster size")

# fix parameters

temperature = args.t

p_add = 1 - np.exp(-2/temperature)

width = args.w

if args.f is None:

# construct a filename from the parameters plus a timestamp (to avoid overwriting)

output_filename = "data_w{}_t{}_{}.hdf5".format(width,temperature,time.strftime("%Y%m%d%H%M%S"))

else:

output_filename = args.f

# equilibration

state = aligned_init_config(width)

total_spin_flips = 0

total_moves = 0

equilibration_target = args.e * width * width

while total_spin_flips < equilibration_target:

total_spin_flips += wolff_cluster_move(state,p_add)

total_moves += 1

average_cluster_size = total_spin_flips / total_moves

# measurement phase

moves_per_measurement = int(args.m * width * width / average_cluster_size)

measurements = 0

start_time = time.time()

last_output_time = time.time()

with h5py.File(output_filename,'w') as f:

# create 1D integer data set that is stored in chunks and can be extended

dataset = f.create_dataset("magnetizations",(0,), maxshape=(None,), dtype='i4', chunks=True)

# store some information as metadata for the data set

dataset.attrs["parameters"] = str(vars(args))

dataset.attrs["lattice size"] = width

dataset.attrs["temperature"] = temperature

dataset.attrs["equilibration sweeps"] = args.e

dataset.attrs["average cluster size"] = average_cluster_size

dataset.attrs["start time"] = time.asctime()

dataset.attrs["moves per measurement"] = moves_per_measurement

dataset.attrs["current_time"] = measurements

magnetizations = []

while True:

run_ising_wolff_mcmc(state,p_add,moves_per_measurement)

magnetizations.append(compute_magnetization(state))

measurements += 1

if measurements == args.n or time.time() - last_output_time > args.o:

# time to output data again

with h5py.File(output_filename,'a') as f:

# enlarge the data set

f["magnetizations"].resize(measurements, axis=0)

# copy the data to the new space at the end of the dataset

f["magnetizations"][-len(magnetizations):] = magnetizations

f["magnetizations"].attrs["current_time"] = time.asctime()

f["magnetizations"].attrs["measurements"] = measurements

magnetizations.clear()

if measurements == args.n:

break

else:

last_output_time = time.time()

Let me highlight two features that are new compared to what we are used to in the Jupyter notebooks:

- Command line arguments

It uses the

argparsepackage to read the simulation parameters from the command line. This has the great advantage that we can change all parameters without changing the source code. For instance, if we wish to run a simulation of the Ising model on a 20x20 grid at temperature \(T=2.5\) and obtain \(2000\) samples we would run$ python3 ising.py -w 20 -t 2.5 -n 2000 -f data_example.hdf5

and the output would be stored in the file

data_example.hdf5. Note that there are several more optional command line parameters one can set (equilibration time, frequency of writing to disk output file, …) but they are assigned default values for convenience. Runningpython3 ising.py --helpwill list all possible parameters.- Storing results periodically

The simulation results are stored in an HDF5 file, like we did in the exercises. This is done at the very end of the simulation when the desired number of measurements has been performed, but also periodically every 30 seconds (but this you can set with the parameters). It has the advantage that you can monitor the progress of the simulation and kill the script at any time without losing much data. Note that we do not just save the observables but also the command line parameters and various other quantities that may be of interest later in metadata of the data set. In general, it is good practice to store all information necessary to reproduce the data.

Now suppose we have run our simulation with the command line above. We can then easily read the data in this notebook and perform data analysis.

import h5py

import numpy as np

def batch_estimate(data,observable,k):

'''Devide data into k batches and apply the function observable to each.

Returns the mean and standard error.'''

batches = np.reshape(data,(k,-1))

values = np.apply_along_axis(observable, 1, batches)

return np.mean(values), np.std(values)/np.sqrt(k-1)

with h5py.File("ising_example/data_example.hdf5",'r') as f:

num_spins = f["magnetizations"].attrs["lattice size"]**2

mean_magnetization = batch_estimate(np.abs(f["magnetizations"][:])/num_spins,np.mean,20)

print("Estimate for w = {}, T = {}, and {} measuremenst: E[|m|] = {:.4f} +- {:.4f}".format(

f["magnetizations"].attrs["lattice size"],

f["magnetizations"].attrs["temperature"],

f["magnetizations"].attrs["measurements"],

*mean_magnetization))

Estimate for w = 20, T = 2.5, and 2000 measuremenst: E[|m|] = 0.3147 +- 0.0046

9.2. Data gathering#

Once you are confident that your simulation code works as intended and you have a good idea of the parameter ranges you would like to collect data for, you are at a stage to scale up and invest some serious cpu time. Of course you could occupy all cores on your pc or laptop and take a nap, but there is a more practical alternative: the compute cluster of our faculty. Those that have signed up for this course, will have been granted access to the High Energy Physics cluster nodes (node = single machine). Running jobs on the cluster nodes requires some preparations, since they are to be submitted through the SLURM job scheduling system. Don’t worry, this is not complicated!

9.2.1. Logging in to one of the compute nodes#

First you will have to login with ssh into one of the compute nodes using a terminal. There are several ways to do this.

- JupyterHub terminal

The first option is to use the terminal interface of JupyterHub in the browser. Simply head to the file system page and select

New(top-right corner) andTerminal. This will bring up a browser tab with a direct ssh interface with to compute node on which your jupyter session is running.- From faculty-managed linux machine

If you are on a faculty-managed linux machine, you can simply open the built-in Terminal (

Ctrl + Alt + T).- From your own computer

From your own computer you can use the built-in Terminal on linux or MacOS, or PuTTy on Windows, but you first have to login into one of the faculties login-servers, e.g.

$ ssh yourusername@lilo.science.ru.nl

which will prompt for your science password.

Then login into one of the cluster nodes (cn29, cn44, cn96, cn97, cn98, cn110, cn111, cn112, cn113) by running e.g.

$ ssh cn110

If your access is denied, please contact the course coordinator because you may not have been added to the right unix groups yet. The command sinfo will provide information on the SLURM partitions to which you have been granted access. It will look something like this:

$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

hefstud up infinite 4 mix cn[110-113]

hefstud up infinite 3 alloc cn[96-98]

hefstud up infinite 3 idle cn[26,29,44]

cncz up 7-00:00:00 1 idle cn13

jupyterhub up 4:00:00 3 idle cn[107,118,119]

The relevant partition is hefstud (for High Energy Physics students). As you see it lists the 10 cluster nodes with an indication of the status (in this example cn96, cn97, … are fully occupied and cn110, cn111, … partially, while cn26, cn29, … are presently not running any jobs). The currently running and queued jobs can be displayed with squeue. Note: hefstud is not the only partition using these machines, so the machines may be occupied even if squeue does not show any running jobs.

9.2.2. Submitting a job script#

Now we wish to perform our simulation on the cluster node. You could execute it in the terminal by running python3 ising.py ..., but please do not do this (unless to quickly test if the code will run on this machine)! The proper way is to submit your job as a SLURM task. For this you should prepare a simple bash script. Let us assume the script ising.py is located in the folder monte-carlo-techniques/_sources/lectures/ising_example in your science home directory (which will be the case if you use the gitpuller link). A minimal version of the bash script would look like this and we would save it in a file ising_job.sh.

#!/bin/bash

#SBATCH --partition=hefstud

#SBATCH --output=std_%A_%a.txt

#SBATCH --mem=100M

#SBATCH --time=2:00:00

cd ~/monte-carlo-techniques/_sources/lectures/ising_example

python3 ising.py -w 16 -t 2.5 -n 100

The lines starting with #SBATCH tell SLURM what resources are requested and how to administrate the job. Note that they are not part of the script itself because # starts a comment line in bash.

In particular, we are telling SLURM that the job should run on the

hefstudpartition (so on one of its 10 nodes).Any output generated by your script together with the job details will be stored in a file whose name depends on the job id (but you could specify a filename yourself if you wish).

The

--mem=100Mtells SLURM that you plan not to use more than 100MB of memory.The

--time=2:00:00option specifies the maximal run time of your script before it will be killed (in this case 2 hours). You can specify a maximal run time of, say, 3 days using the format--time=3-0:00:00.

The lines that do not start with # are commands that will be executed, in this case the working directory is set to the folder in your home directory where the Ising model script is located and then the python script is executed.

To submit the job simply run the following command in the terminal.

$ sbatch ising_job.sh

If the job does not finish (almost) instantaneously you will see it in the job queue.

$ squeue -u yourusername

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

3785215 hefstud isingjob username R 0:10 1 cn110

This means that the scheduler has found a node (cn110) with sufficient resources to execute your job, but it can also happen that the job remains queued until resources are available. The job will disappear as soon as your script has finished. If necessary you can kill the job before it is completed by calling scancel with the job id as parameter.

$ scancel 3785215

9.2.3. Running multiple jobs#

Most often you will want to run many jobs with the same script, but each with different parameters. For this you could produce many bash scripts (possibly in an automated fashion), but there are other options as well. If you wish to run the scripts sequentially, you can simply use a for loop in the bash script. For instance, the following script (ising_job_w10.sh) will execute the script for temperatures 1.5, 1.6, …, 3.5 one after the other.

#!/bin/bash

#SBATCH --partition=hefstud

#SBATCH --output=std_%A_%a.txt

#SBATCH --mem=100M

#SBATCH --time=2:00:00

cd ~/monte-carlo-techniques/_sources/lectures/ising_example

for temp in $(LANG=en_US seq 1.5 0.1 3.5)

do

python3 ising.py -w 10 -t ${temp} -n 20

done

If you want to run several of these jobs simultaneously, you could use a job array. This is done by including an instruction like #SBATCH --array=0-20%3 which tells SLURM to turn the job submissions into 21 individual jobs (with index 0,1,…,20), but never to run more than 3 at the same time. The index of the job is accessible as $SLURM_ARRAY_TASK_ID and can be used to determine the parameters for each individual job, like in the next job (ising_job_w20.sh).

#!/bin/bash

#SBATCH --partition=hefstud

#SBATCH --output=std_%A_%a.txt

#SBATCH --mem=100M

#SBATCH --time=2:00:00

#SBATCH --array=0-20%3

cd ~/monte-carlo-techniques/_sources/lectures/ising_example

temperatures=($(LANG=en_US seq 1.5 0.1 3.5))

temp=${temperatures[$SLURM_ARRAY_TASK_ID]}

python3 ising.py -w 20 -t ${temp} -n 200

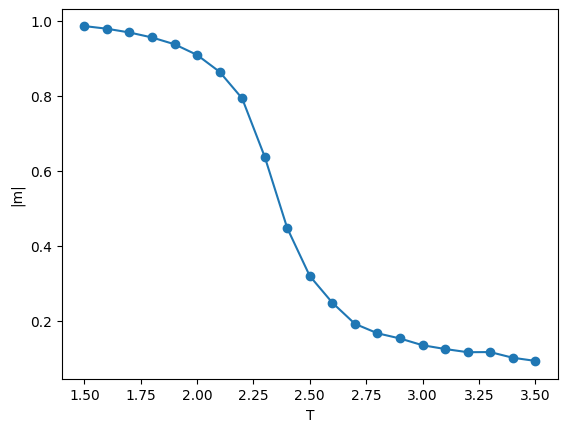

We can then read all data files and perform analysis in this notebook:

import matplotlib.pylab as plt

%matplotlib inline

import glob

filenames = sorted(glob.glob('ising_example/data_w20_*.hdf5'))

mean_magnetizations = []

for filename in filenames:

with h5py.File(filename,'r') as f:

num_spins = f["magnetizations"].attrs["lattice size"]**2

mean_magnetizations.append([f["magnetizations"].attrs["temperature"],

*batch_estimate(np.abs(f["magnetizations"][:])/num_spins,np.mean,20)])

plt.errorbar([m[0] for m in mean_magnetizations],[m[1] for m in mean_magnetizations],

yerr=[m[2] for m in mean_magnetizations],fmt='-o')

plt.xlabel("T")

plt.ylabel("|m|")

plt.show()

9.2.4. Remarks#

- Be considerate to other users.

The department’s compute nodes are shared between research staff and interns and students from this course. CPU resources and memory are limited. Feel free to occupy up to ~5 cores full time or up to ~20 cores for short periods (hours) when the server load is low, but try at all times to leave resources available to others. Be prepared to kill your jobs, if requested by my colleagues or me.

- Have your scripts write partial results to disk periodically.

This way the job becomes as cancelable as possible and you have the opportunity to inspect progress. You want to prevent the situation that you have submitted a script that samples a million configurations for a dozen different model parameters and that only writes the results to a file at the very end. Perhaps the job takes more time than you anticipated: if an expected 12-hour job is not finished after two days and you don’t know its progress, do you wait another day or kill the job? If for whatever reason you have to kill your script before it has finished, you could end up with no data at all. It is therefore advisable to store partial results periodically throughout the simulation.

- Only write files in your home directory.

The cluster nodes have local storage (scratch), but for the purposes of your project you will probably not need it. Given that scratch is not backed up and its contents differs from one node to the other, I would advise you to stick to your own home directory.